AI is no longer just a grammar checker or paraphraser. It can now write, draw, interact with customers, and much more. We have moved beyond using AI to write the occasional piece to trusting it to power entire workflows.

This growing dependency, however, hit a wall when we realized that AI, while efficient and scalable, can also sound robotic, rigid, and cold. Tools like Humanize AI came into the picture to address exactly that problem.

With humanized AI text, our AI-generated content started to sound more human, avoided SEO penalties, and connected more deeply with our users. But like any other innovation, humanizer tools came with an ethical dilemma:

Is this about better writing or a better disguise? Whether it is ethical to use AI humanizer tools quickly became a matter of debate.

To help you understand this dilemma better, this blog unpacks the ethics of humanizing AI content by answering some basic questions: When does it cross the line into deception and disguise? Is it all about transparency and clarity? But before we begin, let us understand what humanizing AI really means.

What Are AI Humanizer Tools?

Our dependency on AI-generated content made us realize that speed and correct English are not enough to make content sail through. We needed efficient writing that still carried human emotion.

We needed a setup that is quick yet understands the nuances of human conversation. This created demand for a tool that could keep up with AI's speed while maintaining the quality of our content.

This is how tools like Humanize AI came into the picture, promising to refine AI-generated content. The idea behind these tools is simple: smooth out the obvious giveaways of AI-generated writing. In simpler terms, it is like adding a filter, but for your text. Consider this example to understand how it works.

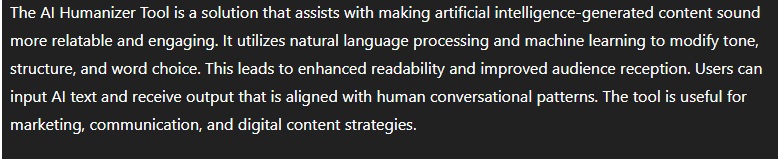

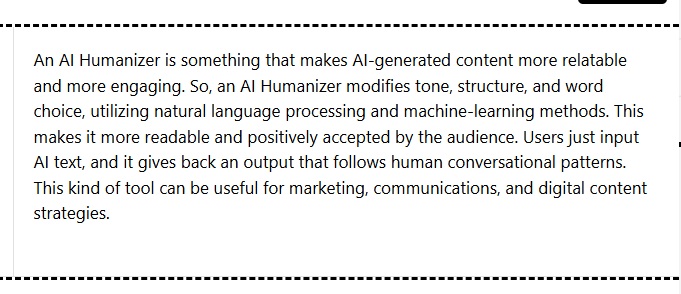

I asked ChatGPT to write a quick introduction for an AI Humanizer tool, and this is how it went:

ChatGPT Sample Text for a sample AI Humanizer Tool

➡️ Notice that the ChatGPT text has no grammatical errors and reads smoothly, yet it feels rigid. It reads more like an instruction manual than a sales pitch. As a marketer, you cannot rely on a pitch like this, especially for a product that promises to humanize content. There are also concerns about SEO penalties and the need to form a genuine connection with readers.

This is where a tool like Humanize AI comes in to tie up the loose ends. When you paste this ChatGPT-generated text into Humanize AI, it strips out the robotic residue, and the result reads more naturally, with human nuance, like this:

The sample above shows why a tool like Humanize AI is useful. In just one click, it does all of the following:

✅ Makes the ChatGPT-generated content more fluid to read.

✅ Adds human nuances to make it sound more real and yet assertive.

✅ Assures the reader that it understands their pain points, helping form a genuine connection.

✅ Takes care of the grammar and SEO checkpoints with ease.

Why Are AI Humanizers Gaining Popularity?

Initially, the AI workflow was simple: we wrote the prompt, and the AI generated the results. But things changed drastically when every other text began to sound the same, and we started noticing the hidden risks of AI content.

We soon found ourselves at a crossroads: we had to protect our integrity as marketers, students, or journalists, and be transparent about our use of AI. As AI-generated writing flooded the digital space, AI detectors like GPTZero and Turnitin started flagging anything and everything.

With suspicion came caution, and it became hard to navigate the digital space even for people relying solely on their own writing. Even human-written text began to be flagged as AI-generated if it was polished, structured, and focused purely on technical aspects.

This growing need to work around false positives, along with rising demand for content that feels human, paved the way for AI humanizers to take off.

Writing grammatically correct sentences was no longer enough; the need of the hour was writing that felt relatable and emotionally intelligent. This put pressure on teams that relied on AI to optimize their work, and especially on non-native English speakers.

🔊 Quick Fact: A HubSpot study found that 86% of marketers review and edit their AI-generated content, both to catch hallucinations and to add a more human feel.

In this way, tools like Humanize AI serve a dual purpose, which explains their well-deserved popularity. They help content meet Google's SEO standards. At the same time, they give non-native speakers an edge, allowing them to edit their work and maintain a certain level of fluency.

Is It Ethical to Use AI Humanizers?

The conversation around the ethical use of AI humanizers is more layered than a yes-or-no question. What truly matters is how the tool is being used and for what purpose. To judge it on ethical grounds, the question we should ask is how, and why, we are using the tool.

Are we relying on AI humanizers to communicate better, or are we hiding something from our readers? There is a fine line between convincing and deceiving our readers, and understanding this boundary is what makes all the difference.

Role of Intent: Deception vs. Accessibility

In a marketing setup, a tool like Humanize AI can help optimize your content generation process and reduce the pressure on your existing team. It also lets you operate at a massive scale without worrying about the budget.

Think about it this way: one tool can stand in for a writer and an editor who never get tired. You can generate as many articles as you need each day and keep your workflow streamlined. But the moment you rely on it for research and stop fact-checking, you invite misinformation, and with it, trouble.

In more sensitive fields like academia, journalism, or publishing, you cannot simply pass off machine-generated content as your own. Where transparency, authorship, and accountability are the key principles, claiming authorship of machine-generated content is ethically and morally dangerous.

At the same time, we cannot dismiss these tools altogether if we want an inclusive workspace. Non-native speakers, neurodivergent people, and many others rely on tools like this to get their point across.

💡Quick Tip: Ultimately, keeping your use of humanized AI text ethical depends on you and your approach to it. Navigating this space becomes much easier when you are clear about what is considered ethical and what is not.

✅ When It Is Ethical

The ethics of an AI tool come down mostly to how and why it is used. If you want to know whether you are right to rely on a humanizer tool, first consider the implications of using it.

- Correcting False Positives: What's worse than violating AI usage rules? Being accused of breaking them when you haven't. Sometimes our writing is polished enough to resemble AI output. As flattering as that may sound, it can trigger AI detectors and create a lot of confusion.

➡️ In cases like this, relying on AI humanizers like Humanize AI to save the day is justifiable and often a necessity. Tweaking the wording of an original piece of work so it is not wrongly flagged is completely acceptable.

- Improving Accessibility and Inclusivity: Everyone should have the basic right to express themselves. But the struggle of writing as a non-native speaker or a neurodivergent person can strip a message of its essence and emotion.

➡️ In situations like this, relying on AI helps make our space more inclusive. If the tool is used to improve clarity, tone, or fluency without misrepresenting the author's intent, it creates a safe, creative space for everyone.

💡Quick Fact: A 2023 study by Stanford University researchers, first shared on arXiv, found that GPT detectors are biased against non-native English writers.

- Transparency and Disclosure: You can rely on Humanize AI even in a sensitive setting, whatever your needs or writing ability. All you have to do is be open about it.

➡️ Disclosing your use of AI ensures you are not gaining an undue advantage that clashes with your ethics and morals. It is also only then that the tool serves its true purpose rather than acting as a quick shortcut.

❌ When It May Not Be Ethical

It is very easy to cross the line with AI, so you have to be mindful of how you control the narrative. There should be full disclosure of how and where AI or humanizer tools are used, especially if the content is presented as original or entirely human-written.

- Using it to outsmart the classroom: If you are using AI to get an edge over other students, that's a huge problem. When you rely on tools like Humanize AI to refine AI-generated essays and call them your own, you undermine the system. It not only hampers your learning but also compromises academic integrity.

➡️ A situation like this not only creates an ethical dilemma for you but can also have practical repercussions. Depending on the situation, you might be penalized for cheating or even expelled.

- When it misleads audiences: Brands that use AI to craft "heartfelt" sob stories to win emotional buy-in are misusing the tool. They manipulate emotions in the name of marketing and undermine the purpose of an AI humanizer. A personal life story that reaches thousands of readers and users shouldn't be a fabrication spun by AI.

➡️ Imagine buying from a brand known for its heartfelt anecdotes and learning later that they were all machine-generated. You would not only feel betrayed but would also have unknowingly given the brand an unfair advantage over its competitors.

- When it violates platform or publisher guidelines: Many well-known platforms are now strict about the use of AI. They have a clear stance on artificial intelligence and discourage its use in most cases. The moment you use a tool like Humanize AI to pass off AI-generated content as your own, you violate their guidelines as well as basic ethical norms.

➡️ Bypassing AI guidelines with a humanizer tool doesn't make the content acceptable; it makes you guilty of deception. Yes, you will make it harder for detectors to flag your content, but you defeat the purpose of the guidelines. And once it comes to light, you may be asked to take down your article or even face a permanent ban, on top of the moral and reputational damage.

How Do AI Detectors Work—and Why Do They Fail?

Imagine a strict exam invigilator who is always vigilant and never lets anyone into the hall without a thorough check. That's roughly what an AI detector does: it scans your content for signs of AI.

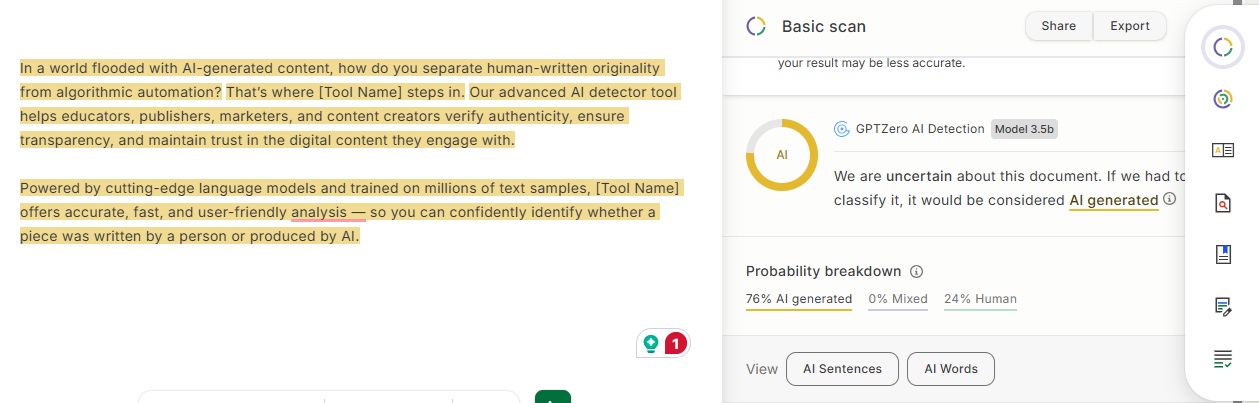

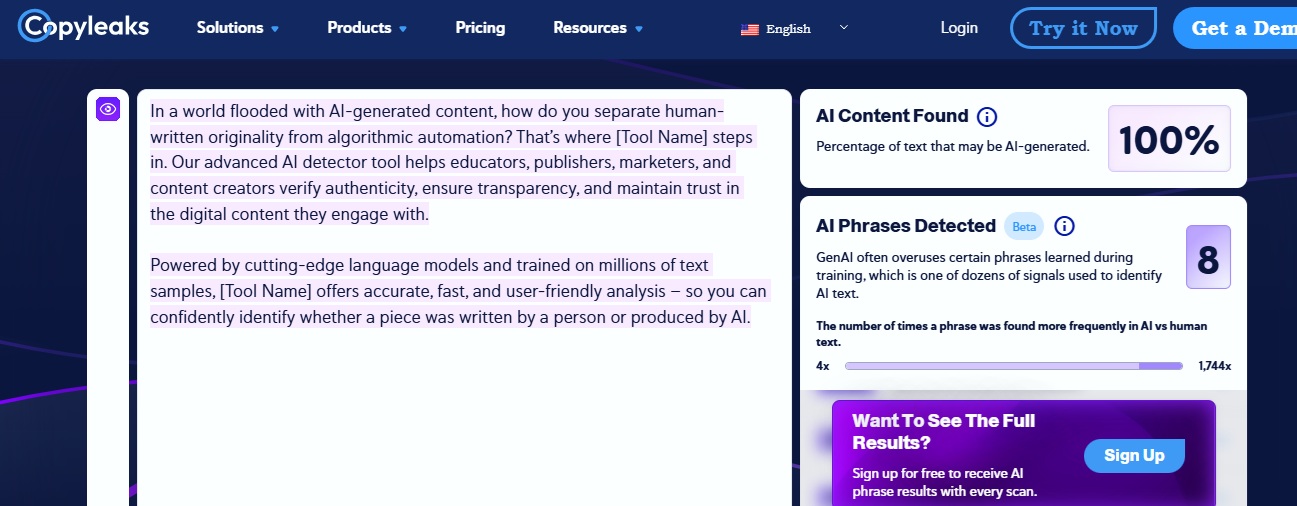

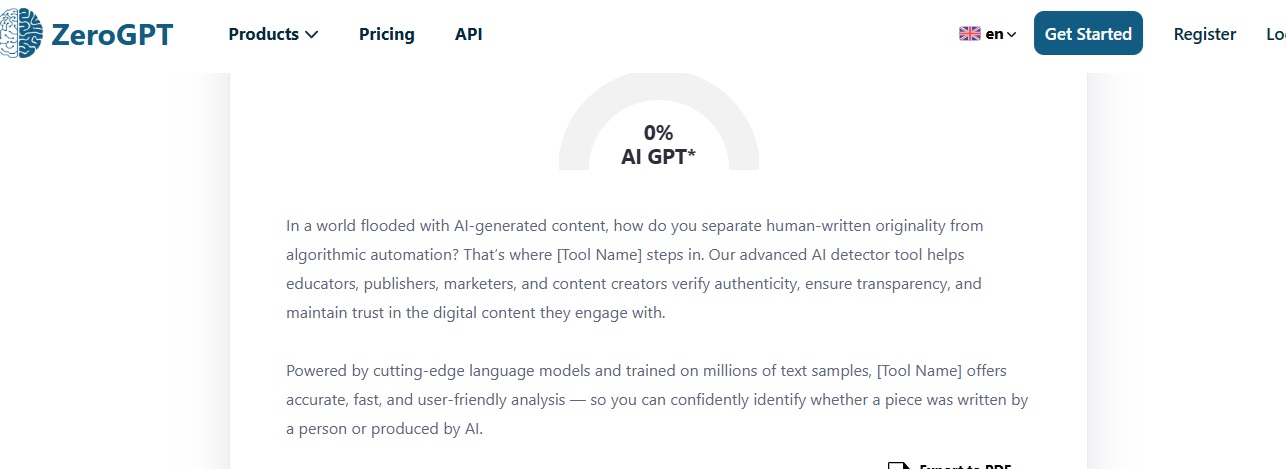

The way detectors are built is also the main reason they sometimes fall flat. The reason is simple: a detector works by identifying patterns and relying on its training datasets. Here is a quick example using ChatGPT-generated content.

- Result generated by Copyleaks

- Result generated by ZeroGPT

🚨 It is concerning to see how differently various detectors judge the same AI-generated content, and it clearly shows how relying on detectors can lead to confusion. If popular tools on the market cannot consistently identify basic ChatGPT-generated content, imagine how they treat a humanized version.

💡Quick Fact: A study by The Washington Post revealed that up to 50% of human-written texts were wrongly flagged by Turnitin.

But why does this happen? Let’s find out.

An AI detector works a bit like a metal detector. It looks for patterns and structures in your sentences that signal the presence of AI. This is why, if you have impeccable writing skills, you might be accused of using machine-generated content.

- Patterns & Sentence Structure: Just as a metal detector uses electromagnetic induction to scan for metal, an AI detector scans your sentences for signs of AI. Detectors are trained on AI-generated content and datasets that help them recognize patterns in any given piece of text. Some are also trained on human-written content so they can learn the difference.

- Perplexity: Perplexity measures how predictable a piece of text is to a language model. In simpler terms, if the next words and sentences are easy to guess, perplexity is low. AI-written text usually has low perplexity because it follows patterns the model knows well.

- Burstiness: Burstiness measures how much sentence length and complexity vary across a piece of writing. Human writing tends to mix short and long sentences, casual and formal tones, whereas AI text often has a rigid, flat rhythm. This is why a low burstiness score is usually treated as a red flag.
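To make perplexity and burstiness more concrete, here is a minimal Python sketch of both ideas. It assumes you already have the probability a language model assigned to each token; real detectors get these from their own trained models, which are not shown here, and the numbers below are made up. It also approximates burstiness as the coefficient of variation of sentence lengths, which is one common simplification rather than how any specific detector (GPTZero, Turnitin, or others) actually scores text. The function names `perplexity` and `burstiness` are hypothetical.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity of a text, given the probability a language model
    assigned to each of its tokens (each value must be in (0, 1]).

    PPL = exp(-(1/N) * sum(log p_i)). Predictable text -> low perplexity.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_log_prob = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-avg_log_prob)


def burstiness(text):
    """Simplified burstiness: coefficient of variation of sentence lengths.

    Assumption: sentences end with '.', '!' or '?', and length is counted
    in whitespace-separated words. 0.0 means every sentence is the same length.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Made-up token probabilities, standing in for a real model's output.
predictable = [0.9, 0.8, 0.95, 0.85]
surprising = [0.2, 0.05, 0.3, 0.1]
print(perplexity(predictable) < perplexity(surprising))  # True

flat = "The tool is fast. The tool is cheap. The tool is simple."
varied = ("It works. Honestly, I did not expect a one-click tool to fix "
          "my awkward, stiff draft this well. Try it.")
print(burstiness(flat))                       # 0.0
print(burstiness(varied) > burstiness(flat))  # True
```

Under this simplified view, low perplexity and low burstiness both push a text toward looking "AI-like," which is also why a terse, uniform human writing style can end up as a false positive.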

💡 This brings us back to the question that started this discussion and paved the way for a tool like Humanize AI: what happens when your writing style is direct and to the point, with few layered nuances?

➡️ AI detectors cannot fully understand writing intent or tone. So when your style is straightforward and minimal, you can get into trouble. You may become the victim of a false positive, with detectors deciding your work is AI-generated.

Where and When Is It Ethical to Use AI Humanizers?

We now know that AI humanizers can be a necessity, and sometimes they can help with human-written content too. But it is human nature to keep looking for shortcuts, and that sometimes means crossing ethical lines.

So, to avoid such consequences, here is a quick recap of where you can use a tool like Humanize AI while staying within ethical boundaries.

- Marketing Copy: If you are promoting a product and writing about it, authorship is rarely a concern. All you have to do is adjust tone and structure while keeping engagement in mind. In a setting like this, the goal is conversion, not creative credit.

- Internal Documents, Early Drafts, or Brainstorming: Internal documents are never meant to be published. They exist to support discussion, smooth out rough ideas, clean up language, or find better phrasing. Using a tool like Humanize AI here is about support rather than replacing human input altogether.

- Accessibility Support (Especially for ESL Users): Not everyone can write fluent English, even for something as simple as a letter, whether because they are non-native speakers or have language difficulties. For them, a tool that helps their English sound more natural is a practical aid.

- Co-Writing Tools (AI as an Assistant, Not a Replacement): Sometimes you may not like how your own writing reads, or, as we have seen, AI detectors may misjudge it badly. Here it is ethically safe to rely on a humanizer tool, because you are not taking credit for someone else's work; you are refining and driving your own ideas.

A Balanced Approach: Transparency and Intent

AI has become an integral part of our workflows thanks to its speed, efficiency, and much more. So it is only natural that questions arise about its usefulness, the intent behind its use, and so on. These conversations are important. But rather than framing them as warnings, we should see them as opportunities to shape a more transparent, empowered future with AI, one that is inclusive for all.

Navigating all this becomes easier as long as we remember that we decide the extent of AI's involvement and can keep our workflow safe and ethical. Guided by intention and awareness, AI can be a powerful collaborator, not a threat. Here's how:

- Encourage Disclosure of AI Assistance When Needed: Being open about AI use is what keeps it from being perceived as a threat. Today's competitive market leaves little room to drop our guard, and any undisclosed advantage becomes an unfair one. Openly acknowledging our reliance on artificial intelligence sets the right standard for everyone involved.

- Watermarking and Disclaimers: Encouraging responsible use reduces dilemmas and ethical concerns around AI. When tools and platforms offer built-in options such as watermarks or disclaimers, audiences can make informed decisions without being misled. This also gives creators a safe space to use AI in ways that align with their values, be it improving accessibility, saving time, or experimenting with tone and style.

- Promote Ethical Usage: Popular AI platforms should talk about the intent behind their tools, whatever their nature. This helps audiences understand that there is always a fine line between clarity, accessibility, and polish on one side and outright deception or manipulation on the other.

Final Thoughts: Ethics in the Age of AI Writing

AI enthusiasts and people who reject AI have one thing in common: both care about the overall well-being of their content space. People who reject AI fear it will have real-world ethical repercussions, such as misinformation, loss of originality, job displacement, and bias.

AI supporters, on the other hand, see things differently. They see AI as an ally that can empower creators, break down barriers, enhance accessibility, and boost productivity.

In the age of AI writing, ethics isn't about rejecting technology; it's about how we use it. There is no rigid answer to the question "Is it ethical to use AI humanizers or tools that humanize AI-generated text?" because the answer depends on context and on your intent in using AI.

Using the tool to manipulate reflects poor judgment, while using the same tool to improve clarity or accessibility does not. AI is neutral. The ethics emerge from how we direct it.